You know that exhausting feeling of juggling five different tools just to run one marketing campaign. One tool for emails, another for social posts, one for ads, one for website analytics, and endless spreadsheets to track leads. At the end of the month, you are still left wondering which of these efforts really worked.

That was exactly where one of our clients found themselves.

Let me share how we helped them simplify their MarTech with a single, integrated solution.

From Scattered Tools to a Unified Platform

Initially, the client focused only on basic email campaigns. Messages went out, but results were not tracked. Sales and marketing were not connected, and opportunities were slipping through the cracks.

As their business grew, their marketing needs became more complex. They wanted a way to manage all activities in one place instead of relying on multiple disconnected tools.

That was when we introduced them to unified platform options that could manage CRM, email, automation, ads, social posts, and reporting in one tool. The platform we chose to manage the needs of this particular client was HubSpot.

Gaining Visibility into Customer Journeys

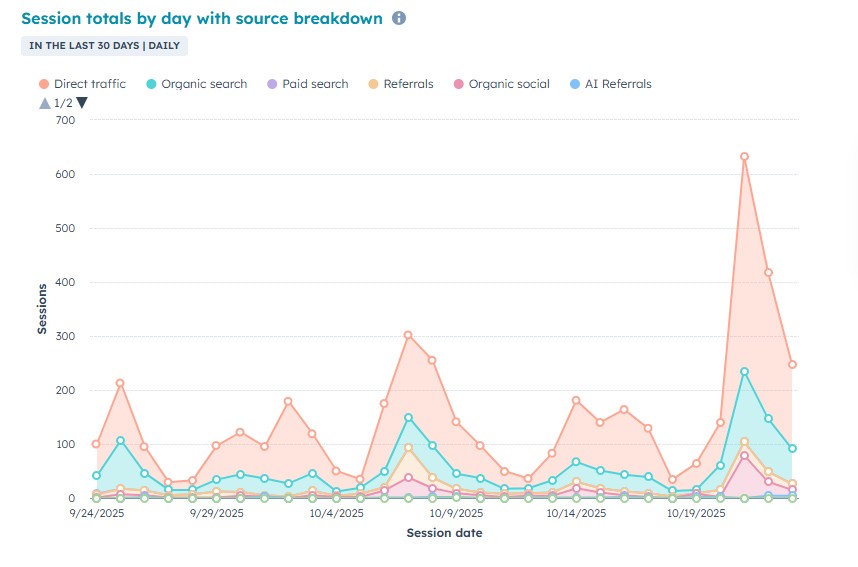

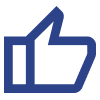

The first time our client saw the value of a unified platform was when we connected their website to HubSpot. Suddenly, with proper cookie tracking and GA4, they could see which pages visitors engaged with, how long they spent on each page, and which actions they took.

Instead of guessing what visitors cared about, they finally had real, actionable data.

Turning Form Submissions into Actionable Leads

We then added forms that captured inquiries directly from the website and fed them into the CRM. This gave us clear visibility into where leads were coming from and how they were engaging.

Instead of scattered and unorganized contacts, we now had structured data. By segmenting these leads based on interests and behavior, we could personalize communications for each audience group and make every interaction more relevant.

Running Campaigns Without the Chaos

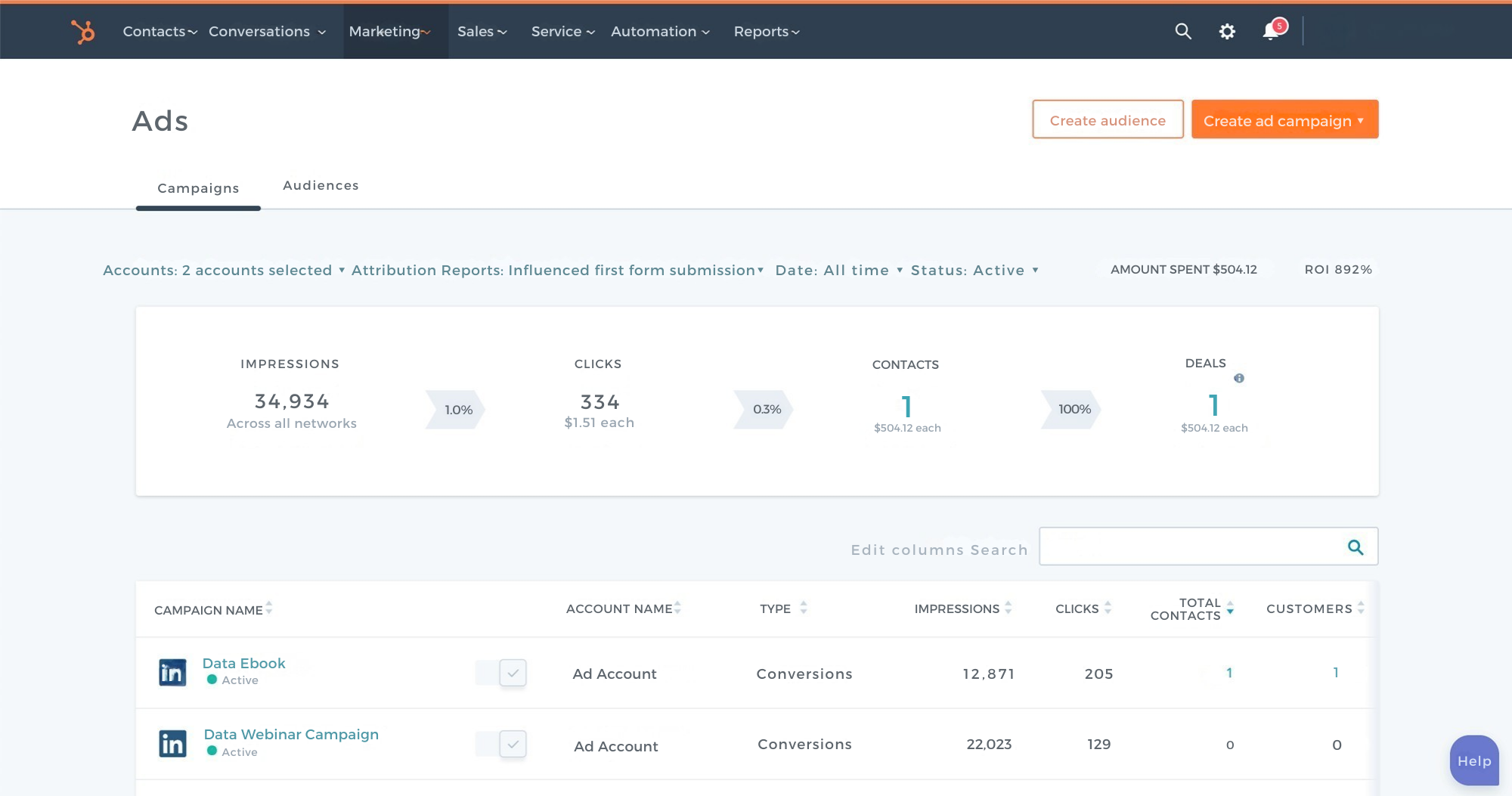

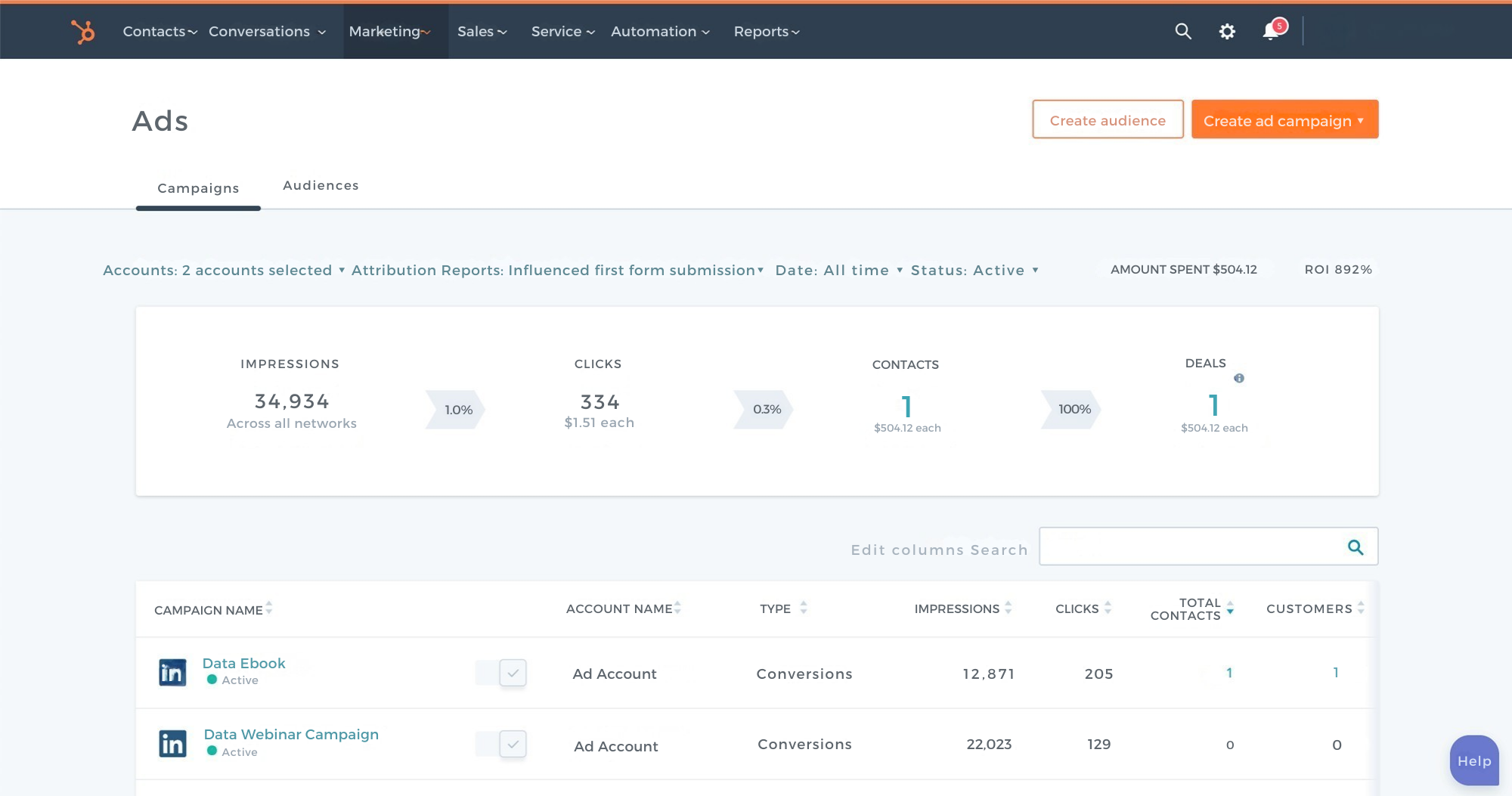

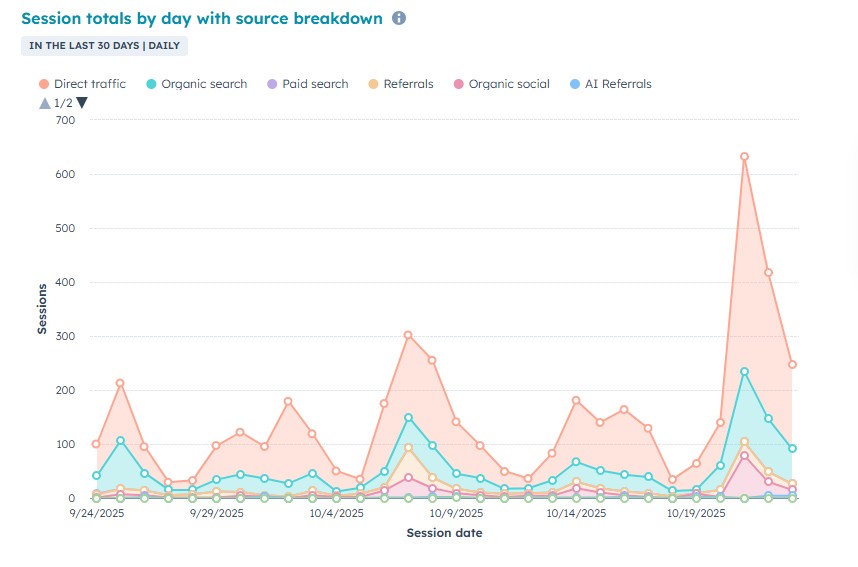

With segmentation in place and audience preferences known, we managed emails and personalized communications, scheduled social posts, and ran ad campaigns directly within the platform, eliminating the need to switch tools or transfer data.

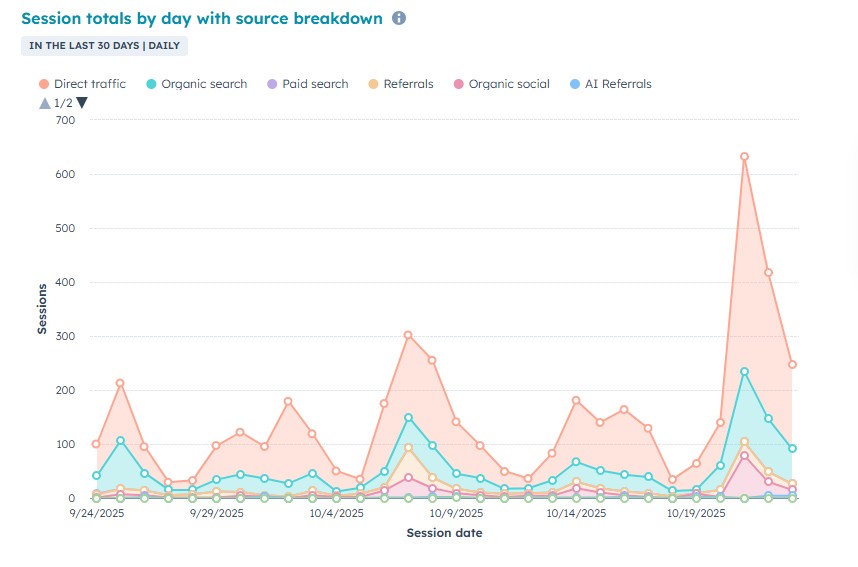

The best part? All the results were consolidated in a single dashboard. Engagement, reach, and CTRs were now easy to see and compare.

Automating Follow-Ups So Nothing Falls Through

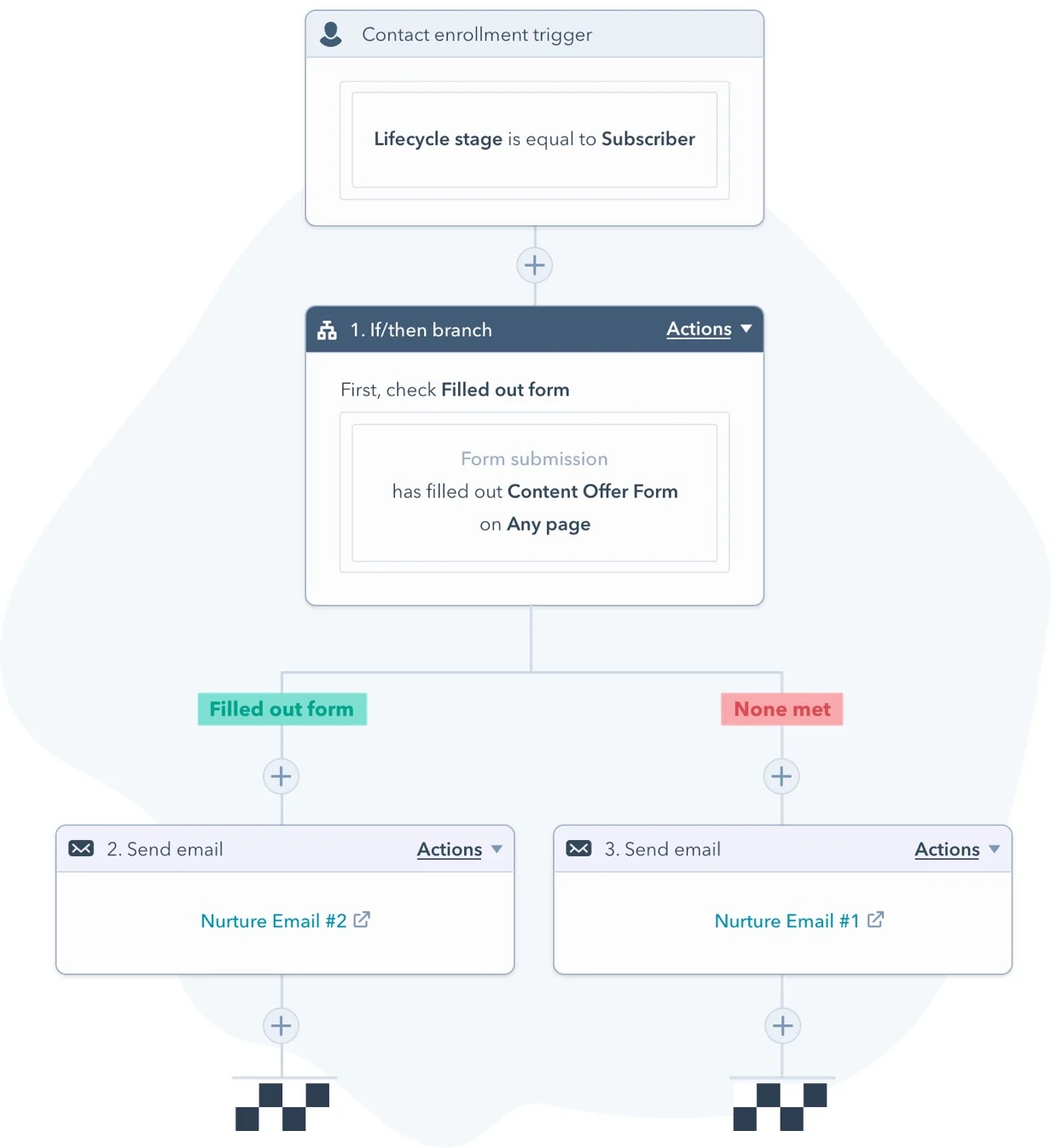

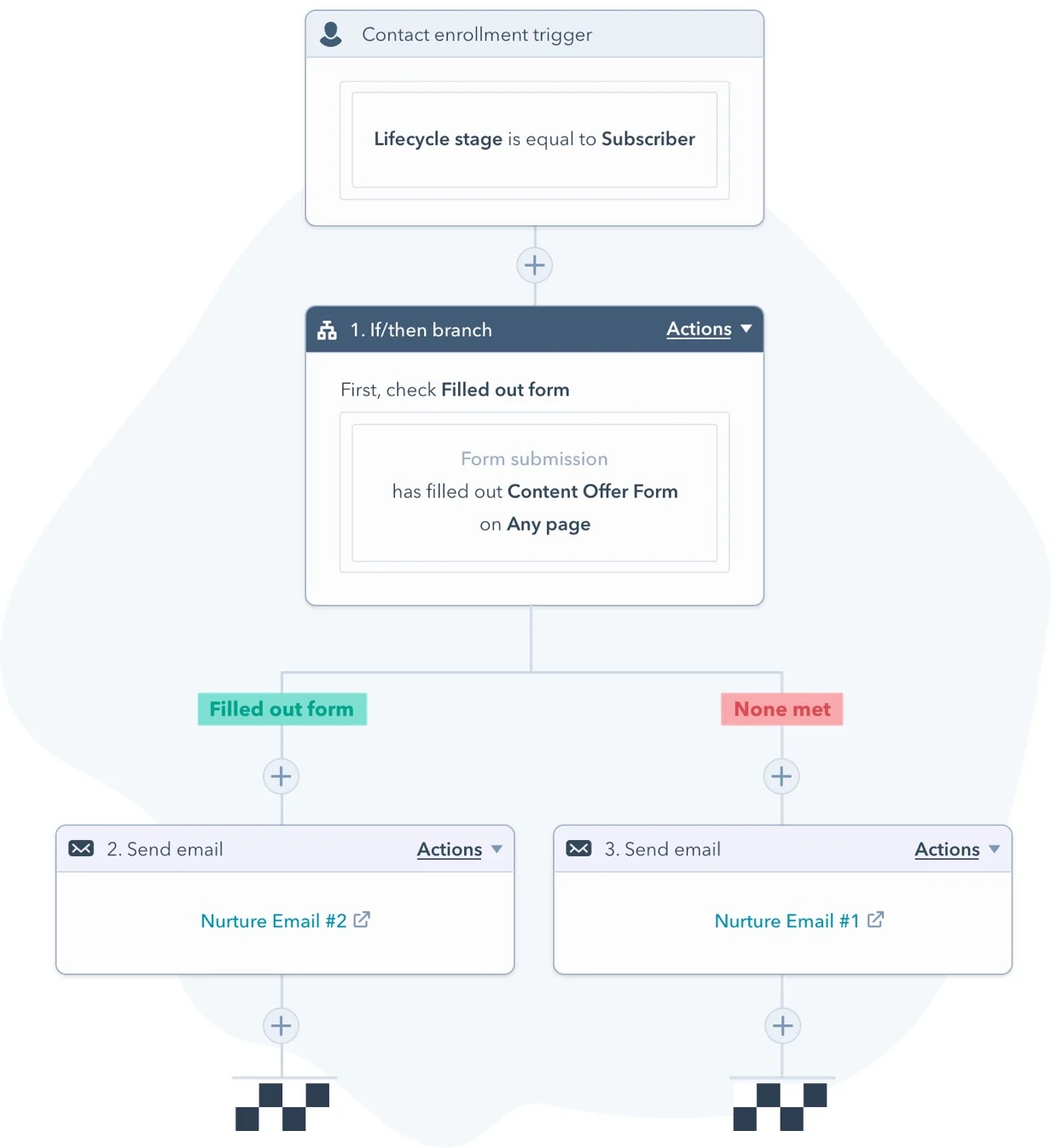

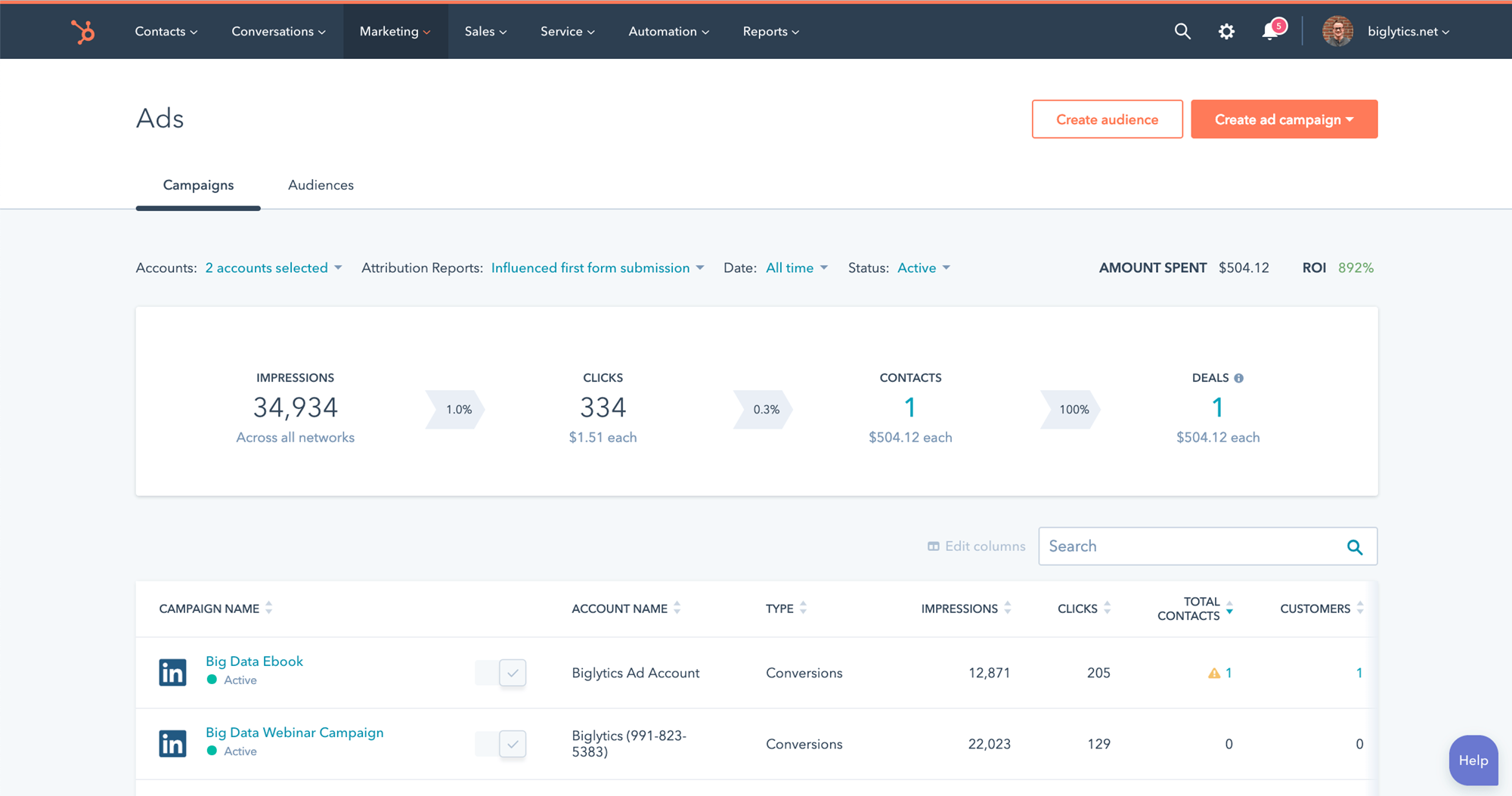

The next major improvement came with automated nurture workflows. The client could ensure that every lead received timely follow-ups without manual effort. For example, if someone downloaded a brochure from the site, an automated nurture email was sent a week later. Based on engagement, they either received additional resources or were retargeted with ads.

Follow-ups no longer depended on manual effort, which gave the team more insight and actionable data and freed up precious time for other projects.
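For readers who like to see the logic spelled out, the brochure example above can be sketched as a simple decision rule. This is a minimal illustration in plain Python, not HubSpot's workflow engine or API; the `Lead` fields, the `next_action` function, and the action names are all hypothetical and exist only for this sketch.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

# Delay between the brochure download and the nurture email (one week, as in the example).
NURTURE_DELAY = timedelta(days=7)


@dataclass
class Lead:
    """Hypothetical lead record; field names are assumptions for illustration."""
    email: str
    downloaded_on: date                      # when the brochure was downloaded
    nurture_sent_on: Optional[date] = None   # None until the nurture email goes out
    engaged: Optional[bool] = None           # None until engagement is known


def next_action(lead: Lead, today: date) -> str:
    """Return the next step for a lead in the brochure nurture flow."""
    if lead.nurture_sent_on is None:
        # The nurture email is sent once a full week has passed since the download.
        if today - lead.downloaded_on >= NURTURE_DELAY:
            return "send_nurture_email"
        return "wait"
    if lead.engaged is None:
        # Email sent, but no engagement signal yet.
        return "wait"
    # Engaged leads get more resources; the rest are retargeted with ads.
    return "send_additional_resources" if lead.engaged else "retarget_with_ads"
```

In a real platform this branching is configured visually rather than coded, but the rule is the same: wait, send, then branch on engagement.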

Reports that Tell the Story

Every marketer wants to know if their effort is really paying off.

With reporting dashboards, our client could finally see performance across email, social, ads, and web all in one place. No messy spreadsheets. No blind spots. It became immediately obvious what was working and what needed adjustment, and easier to step in when something did.

What About AI?

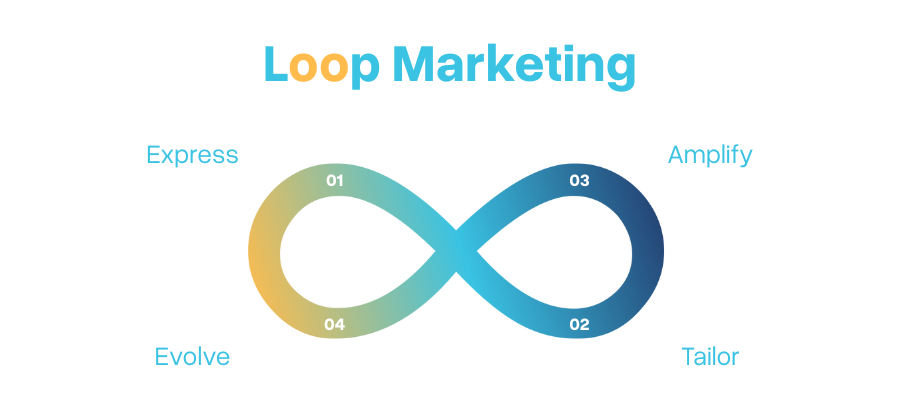

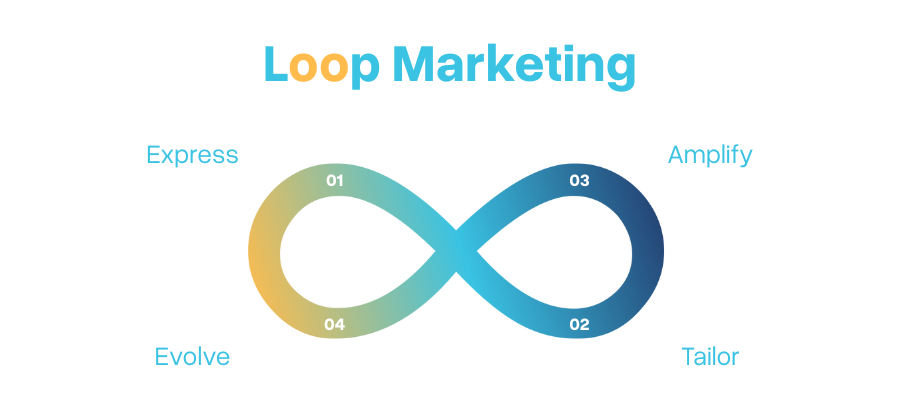

HubSpot continues to evolve. At INBOUND 2025, they announced “Loop Marketing,” which takes AI even deeper into the platform. Tools such as ChatSpot and Content Assistant now help write emails, suggest social content, build dashboards, and even optimize campaigns.

It feels less like using software and more like working with a teammate.

Final Thoughts

This transformation answered our client’s biggest questions:

- Can it integrate with our website? Yes.

- Can it manage email, social, ads, and reporting in one place? Yes.

- Can it save time with automation and AI? Absolutely.

We work with a range of marketing automation and CRM platforms including HubSpot, Eloqua, and others to help businesses connect their marketing, sales, and customer data in one place.

HubSpot is one of the platforms we specialize in, but our experience covers several tools so clients can choose what fits their needs best.

As a HubSpot Solution Provider, we use HubSpot’s full suite of marketing and CRM tools to help our clients run smarter campaigns, nurture leads, and see measurable results.

We are HubSpot Marketing Hub Software certified, which validates our expertise and strengthens our ability to deliver measurable results for our clients.

Combining our semiconductor marketing experience with the latest MarTech tools has given our clients end-to-end expertise, helping them scale their marketing efforts more efficiently and cost-effectively.

Get results from HubSpot with our certified guidance. Contact us today.
